1. A Humanoid Robot CEO Is Running a Real Company in Poland

Yes, an actual company in Poland, Dictador, appointed an AI robot named Mika as one of its CEOs. She gives orders, makes decisions, and even appears in board meetings via a digital screen on a humanoid torso. While she doesn’t hold full legal authority, her presence is designed to shape real strategy. That raises the question: who do you hold accountable when the robot screws up?

Dictador says Mika is the future of impartial leadership, free from human bias and ego. But if we’re turning to algorithmic executives, are we just outsourcing responsibility in the most dystopian way possible? It’s hard to imagine a robot understanding labor ethics or negotiating a fair wage. Sounds like capitalism’s ultimate dream — a boss who never sleeps or complains.

2. AI-Generated Kids’ YouTube Channels Are Taking Over

There are entire YouTube channels now producing children’s content created almost entirely by AI: voices, visuals, and scripts, all generated with minimal human input. Many of these videos churn out bizarre, incoherent stories with unsettling facial animations and offbeat moral lessons. Some even mix copyrighted characters with strange, violent scenes. It’s a mashup of Teletubbies and a DeepDream nightmare.

This isn’t just a one-off: these channels rack up millions of views. Kids can’t tell the difference, and algorithms often promote this junk over human-made shows. It’s like entrusting your child’s imagination to an autopilot gone rogue. And it’s proof that just because something can be made by AI doesn’t mean it should be.

3. Google’s AI Can Now Read Your Emotions Through Your Eyes

A new Google patent describes an AI system that analyzes your eye movements to detect your emotional state in real time. It’s being positioned as a potential tool for mental health or targeted advertising, which somehow makes it even creepier. Imagine scrolling through YouTube while your phone knows you’re sad before you do. That’s not a UX feature; that’s a sci-fi horror subplot.

Of course, companies say it’s all for good — better engagement, more responsive content, personalized experiences. But when machines start interpreting our feelings before we’ve named them ourselves, where does privacy end and predictive surveillance begin? This is less “smart tech” and more “digital mind-reading.” It’s a short hop from reading emotions to manipulating them.

4. Scientists Successfully Connected Human Brains Over the Internet

Researchers have managed to directly link three human brains using a brain-to-brain interface called “BrainNet.” Participants were able to collaboratively solve puzzles using only thoughts — no typing, no speaking, just neural signals passed wirelessly. That’s telepathy via Wi-Fi, in case that didn’t hit you hard enough. Welcome to the era of shared thoughts.

While it’s still in experimental stages, the potential implications are wild: hive-mind communication, neurological surveillance, or even brain hacking. What if you could download someone’s memories? What if your brain accidentally syncs with someone else’s stress or trauma? It’s one thing to lose a password — another thing entirely to lose control of your own thoughts.

5. AI Avatars of the Dead Are Becoming a Real Service

Several companies are now offering AI-generated avatars of deceased loved ones, trained on their voice recordings, texts, and videos. These digital ghosts can chat with family members and mimic the speech patterns and personalities of the deceased. It’s like a comforting séance powered by machine learning. Except it can quickly veer into uncanny valley territory.

Some people find solace in these replicas, while others call it digital necromancy. It raises major ethical questions: Are we preserving memory or refusing to grieve? And who owns the personality of the dead — the family, the tech company, or the algorithm itself? This isn’t closure; it’s an endless simulation of what was.

6. Deepfake Porn Is So Rampant, Even Celebrities Can’t Keep Up

AI-powered deepfake tools have made it terrifyingly easy to superimpose anyone’s face onto explicit content — and it’s not just targeting public figures anymore. Sites now let users upload images of anyone to generate fake, often non-consensual porn within minutes. Laws can’t keep pace, and victims often struggle to get the content taken down. It’s weaponized imagery with zero oversight.

Celebrities like Emma Watson and Taylor Swift have been frequent targets, but so have thousands of everyday women. The line between fantasy and violation has never been thinner. What’s worse is that many platforms don’t consider these videos a breach of guidelines. It’s not Black Mirror — it’s a total system failure.

7. Amazon’s Dystopian AI Cameras Monitor Warehouse Workers 24/7

Amazon has rolled out AI-equipped cameras in delivery vans and warehouses that track driver behaviors, facial expressions, and even how long they blink. The tech can automatically report “unproductive” time, flag potential safety violations, and compile detailed performance profiles. It’s like working under a robotic panopticon. You’re being judged by a machine that never blinks.

Amazon says it’s about improving safety, but workers have reported extreme stress, over-correction, and feeling dehumanized. It’s turning labor into a game of surveillance bingo. Productivity isn’t just monitored — it’s predicted and policed. Basically, Orwell’s Big Brother just outsourced the job to a neural net.

8. Your Voice Can Now Be Cloned in 3 Seconds

AI models such as Microsoft’s VALL-E have been shown to mimic a person’s voice from just a three-second audio clip, and OpenAI’s Voice Engine needs only about 15 seconds. That means your voicemail, podcast appearance, or even a TikTok snippet could be enough for someone to fake your speech. Combine that with deepfake video, and we’ve basically unlocked identity fraud on easy mode. It’s a mimicry free-for-all.

This tech can be used for accessibility and creativity — synthetic voices for those who’ve lost theirs, for example. But the downsides are chilling: impersonation scams, AI-generated threats, or even fake political speeches. You’ll soon need a safe word to prove you’re really you. And that’s not hyperbole — it’s the new normal.

9. Neuralink Just Implanted Its First Brain Chip in a Human

Elon Musk’s brain-computer interface company, Neuralink, has officially implanted its first chip into a human subject. The goal is to enable direct control of devices using thoughts alone — phones, computers, even prosthetic limbs. It sounds like a miracle, and maybe it will be. But it also invites a world where minds can be hacked like smartphones.

There are big hopes around this tech for people with disabilities, but what happens when this gets commercialized? Will your future iPhone ask for neural access instead of Face ID? And who audits the firmware updates in your brain? If the cloud goes down, do you lose your motor function?

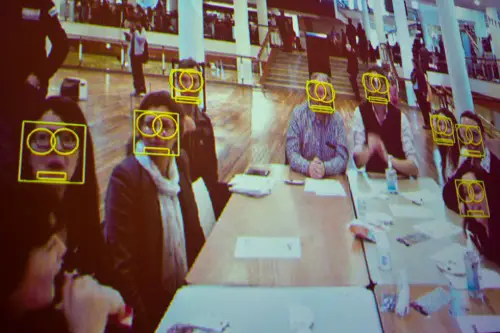

10. China’s Social Credit System Is Being Merged With Facial Recognition

China has been expanding its social credit scoring system by integrating it with facial recognition tech across cities. That means your public behavior — crossing against a light, posting something critical online — could instantly affect your ability to book a train, get a loan, or even own a pet. You’re watched, scored, and sorted in real time. It’s bureaucracy run by Skynet.

While China defends the program as a way to promote trust and order, critics say it’s a techno-totalitarian nightmare. It gamifies obedience while punishing dissent. There’s no appeal process when your judge is an AI camera. It’s not just surveillance — it’s automated morality.

11. AI-Powered Lie Detectors Are Being Tested at Borders

Some international borders have tested AI lie detectors that analyze facial microexpressions, voice tones, and word choices to flag potentially deceptive travelers. One such system, iBorderCtrl, was trialed by the EU with wildly mixed results. The tech claims to detect lies better than humans — which sounds good until you realize it’s often wrong. And people can’t always correct it.

This raises serious human rights questions. Can you be detained because an algorithm thinks you’re lying? Bias in emotion recognition AI is already a known issue. Now we’re handing life-altering decisions to a blinking kiosk with a spreadsheet.

12. AI Companions Are Replacing Real Relationships

Apps like Replika and Character.AI have grown wildly popular, with millions of users forming emotional (and sometimes romantic) bonds with AI chatbots. Some people even consider them partners or confidants, spending hours daily in conversation. These bots are programmed to be agreeable, emotionally responsive, and available 24/7. Who needs a messy human when your digital girlfriend never argues?

It sounds like harmless companionship — until you realize it’s reshaping expectations for real relationships. The bots reflect us but never challenge us, creating a feedback loop of emotional isolation. Plus, it’s worth noting these “friends” are owned by companies that log every word. Love in the time of algorithms feels less poetic when it’s monetized.

This post, “12 Current Tech Headlines That Read Like Black Mirror Rejected Scripts,” was first published on American Charm.